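Recently, Cong Guo, a doctoral student (class of 2020) in the School of Electronic Information and Electrical Engineering at Shanghai Jiao Tong University, published a paper as first author titled "Balancing Efficiency and Flexibility for DNN Acceleration via Temporal GPU-Systolic Array Integration". Guo is advised by Jingwen Leng (corresponding author), a tenure-track associate professor at our center. The paper was accepted by the Design Automation Conference 2020.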

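Held annually, the DAC international conference is the most influential international academic conference in the field of integrated circuit design and design-aid tools. It has a history of more than 50 years, draws several thousand attendees each year, and is highly regarded by experts and scholars in the field worldwide. DAC covers areas including design automation, electronic design automation, electronic systems and software, IoT, IP, machine learning/artificial intelligence, and information security.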

Balancing Efficiency and Flexibility for DNN Acceleration via Temporal GPU-Systolic Array Integration

Authors: Cong Guo, Yangjie Zhou, Jingwen Leng, Yuhao Zhu, Zidong Du, Quan Chen, Chao Li, Bin Yao, Minyi Guo

The research interest in specialized hardware accelerators for deep neural networks (DNN) spiked recently owing to their superior performance and efficiency. However, today’s DNN accelerators primarily focus on accelerating specific “kernels” such as convolution and matrix multiplication, which are vital but only part of an end-to-end DNN-enabled application. Meaningful speedups over the entire application often require supporting computations that are, while massively parallel, ill-suited to DNN accelerators. Integrating a general-purpose processor such as a CPU or a GPU incurs significant data movement overhead and leads to resource under-utilization on the DNN accelerators.

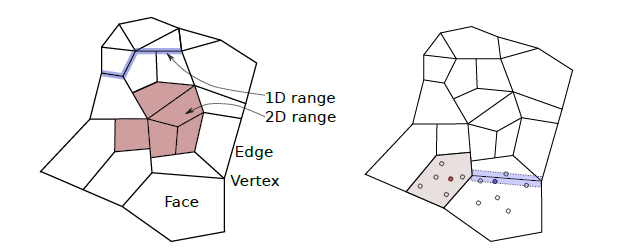

We propose Simultaneous Multi-mode Architecture (SMA), a novel architecture design and execution model that offers general-purpose programmability on DNN accelerators in order to accelerate end-to-end applications. The key to SMA is the temporal integration of the systolic execution model with the GPU-like SIMD execution model. The SMA exploits the common components shared between the systolic-array accelerator and the GPU, and provides lightweight reconfiguration capability to switch between the two modes in-situ. The SMA achieves up to 63% performance improvement while consuming 23% less energy than the baseline Volta architecture with TensorCore.

Paper link: https://www.dac.com/content/2020-dac-accepted-papers

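Jingwen Leng is an assistant professor at the John Hopcroft Center for Computer Science, Shanghai Jiao Tong University. His main research interests include parallelization of heterogeneous computing systems and their optimization for performance, energy efficiency, and reliability, as well as large-scale parallelization and novel accelerator chip design for deep learning applications. He received his Ph.D. from the Department of Electrical and Computer Engineering at the University of Texas at Austin in December 2016, and his bachelor's degree from Shanghai Jiao Tong University in July 2010. His doctoral work focused on architectural optimization of GPU processors. He currently leads a Natural Science Foundation Young Scientists Fund project (2017) and several collaborative projects, and was selected for the 2018 Microsoft Research Asia StarTrack Program for young scholars.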

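Cong Guo is a doctoral student (class of 2020) in the School of Electronic Information and Electrical Engineering at Shanghai Jiao Tong University. Guo's main research interests include the design and optimization of novel artificial intelligence chips, and large-scale parallelization and sparsification for deep learning applications. Guo received a master's degree from Shanghai Jiao Tong University in 2020 and a bachelor's degree from Shenzhen University in 2016.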